Buzzword or not, Big Data is not a passing fad or something that businesses can ignore.

At first glance the Big Data concept seems self-explanatory. Big Data, as the name suggests, is surely all about very large quantities of data.

Big Data certainly involves vast quantities of data, usually in the region of petabytes and exabytes. But the size of the data set is just one facet of Big Data.

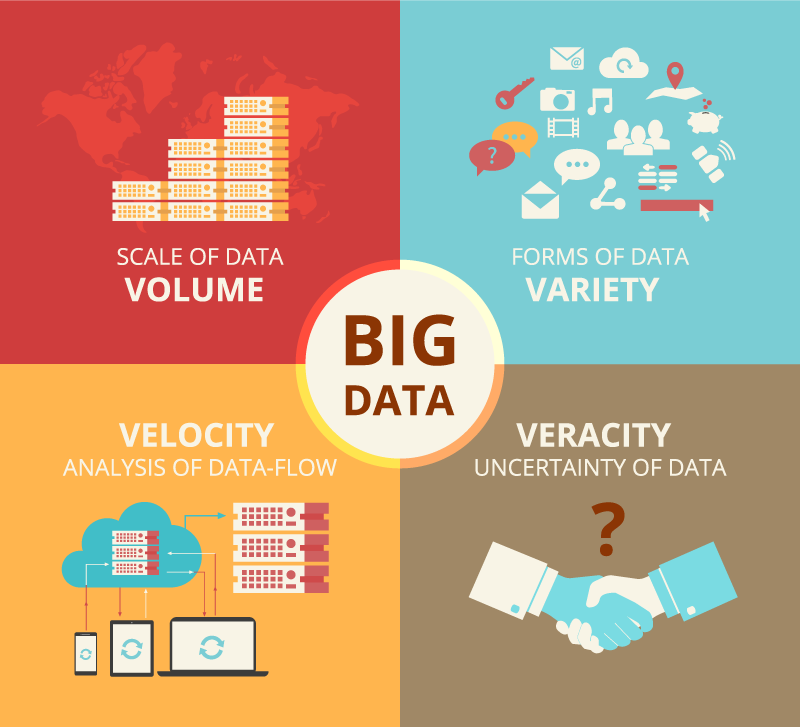

Characteristics of Big Data

In 2001 Douglas Laney began the process of defining the emerging concept of Big Data. He did so by identifying three defining characteristics of Big Data:

- Volume

- Velocity

- Variety

- Veracity*

*Laney originally posited 3 characteristics but over time other ‘Vs’ have been added. Here we include the extra characteristic of Veracity

Volume

Volume refers to the quantity of data generated and stored by a Big Data system.

Volume refers to the quantity of data generated and stored by a Big Data system.

Here lies the essential value of Big Data sets – with so much data available there is huge potential for analysis and pattern finding to an extent unavailable to human analysis or traditional computing techniques.

Given the size of Big data sets, analysis cannot be performed by traditional computing resources. Specialized Big Data processing, storage and analytical tools are needed. To this end, Big Data has underpinned the growth of cloud computing, distributed computing and edge computing platforms, as well as driving the emerging fields of machine learning and artificial intelligence.

In dealing with these large volumes of data, the big data computing system needs also to be able to reliably detect critical and/or missing data – see Veracity below.

Variety

The Internet of Things is characterized by a huge variety of data types. Data varies in its format and the degree to which it is structured and ready for processing.

The Internet of Things is characterized by a huge variety of data types. Data varies in its format and the degree to which it is structured and ready for processing.

With data typically accessed from multiple sources and systems, the ability to deal with variability in data is an essential feature of Big Data solutions. Because Big Data is often unstructured or, at best, semi-structured one of the key challenges is the task of standardizing and streamlining data.

Products like Open Automation Software specialise in smoothing out your big data by rendering data in an open format ready for consumption by other systems.

Velocity

The growth of global networks and the spread of the Internet of Things in particular means that data is being generated and transmitted at an ever increasing pace.

The growth of global networks and the spread of the Internet of Things in particular means that data is being generated and transmitted at an ever increasing pace.

Much of this data needs to be analyzed in real time so it is critical that systems are able to cope with the speed and volume of data generated.

Systems must be robust and scalable and employ technologies specifically designed to protect the integrity of high speed and realtime data. handle the rate such as advanced caching and buffering technologies.

Big Data systems rely on networking features that can handle huge data throughputs while maintaining the integrity of real time and historical data.

Veracity

Data quality and validity are essential to effective Big Data projects. If the data is not accurate or reliable than the expected benefits of the Big Data initiative will be lost. This is especially true when dealing with realtime data. Ensuring the veracity of data requires checks and balances at all points along the Big Data collection and processing stages.

Data quality and validity are essential to effective Big Data projects. If the data is not accurate or reliable than the expected benefits of the Big Data initiative will be lost. This is especially true when dealing with realtime data. Ensuring the veracity of data requires checks and balances at all points along the Big Data collection and processing stages.

Complexity can be reduced through automated systems. An example of this is Open Automation Software’s One Click automated setup feature that can quickly scan a server and automatically configure huge numbers of data tags without the risk of human error.

Accurate queuing and buffering of data, timestamping and and the use of the most efficient communications protocols go a long way to ensuring the veracity of data.